As AI takes on more of the software development process, how do we preserve a sense of personal authorship in what we create?

Traditionally, building software means typing out each line of code by hand or fiddling with property panels in design tools to tweak every last element. This offers precise control but can be both time-consuming and intimidating for non-experts.

AI shifts this dynamic by letting you jump from a broad, sometimes fuzzy description directly to a finished product.

The tradeoff is specificity. The less detail you provide, the more room there is for AI to interpret, and possibly misinterpret, your vision. But this openness can also spark serendipity, revealing possibilities you hadn't considered.

When someone says "dog", what kind of dog do they mean? In what art style? In what pose?

Mosaic is a collection of prototypes designed to reimagine these tools as a wayfinding and discovery process. If you have a meticulously detailed spec, the system follows it exactly. But if you only have a notion—like “fire and ice” or “sci-fi romance”—the AI can generate a range of interpretations, each capturing the desired vibe in its own way. You pick the direction that resonates most, then iterate on related variations to refine things further.

By framing creation in this way, we lower the barrier to creating designs without losing the personal touch. The AI can pull from meaningful sources of inspiration—like a photo from your travels, an artwork you admire, or the contents of a blog post—capturing the intangible qualities behind them. It then maps those themes or moods onto design decisions, enabling you to explore the many ways these influences can be expressed.

So what does Mosaic look like in practice?

High-dimensional, interpretable vectors

Early on, we tried interpolating directly between the properties of a design.

We interpolated between the content of a design.

And we even interpolated between the structure of its components.

But none of these were getting to the heart of the problem we were trying to solve.

Mosaic represents design elements (fonts, color palettes, borders, spacing, SVG textures, and gradients) as high-dimensional vectors. This lets us interpolate between concepts and semantically merge aesthetics. It’s a way of manipulating style at a higher level, rather than dealing with individual properties.
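To make the idea of interpolating between style vectors concrete, here is a minimal sketch. The axis names, the font names, and their coordinates are all hypothetical; the post does not describe Mosaic's actual dimensions or data, so treat this as an illustration of the general technique only.

```python
from math import sqrt

# Hypothetical interpretable axes, each scored in [0, 1].
AXES = ("warmth", "sharpness", "formality", "playfulness")

# Hypothetical catalog of fonts embedded on those axes.
FONTS = {
    "Slab Serif A": (0.7, 0.8, 0.6, 0.2),
    "Rounded Sans B": (0.6, 0.1, 0.2, 0.9),
    "Geometric Sans C": (0.3, 0.6, 0.7, 0.3),
}

def lerp(a, b, t):
    """Linearly interpolate between two vectors; t=0 gives a, t=1 gives b."""
    return tuple((1 - t) * x + t * y for x, y in zip(a, b))

def nearest(catalog, target):
    """Return the name of the catalog entry closest to target (Euclidean)."""
    def dist(v):
        return sqrt(sum((x - y) ** 2 for x, y in zip(v, target)))
    return min(catalog, key=lambda name: dist(catalog[name]))

# Move a quarter of the way from one font's style toward another's,
# then snap back to a real font in the catalog.
blend = lerp(FONTS["Slab Serif A"], FONTS["Rounded Sans B"], 0.25)
print(nearest(FONTS, blend))  # prints "Slab Serif A"
```

Snapping the blended vector to the nearest catalog entry is one simple way to turn a point in the space back into a usable design element.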

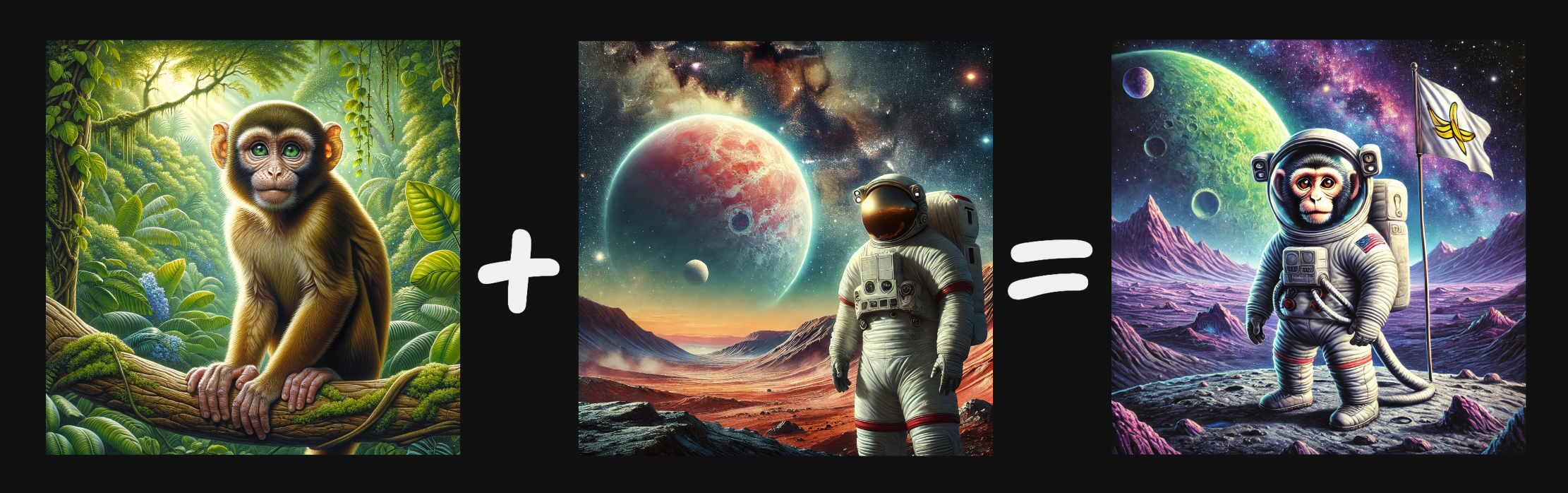

It isn't enough to just interpolate between ideas; we need the ability to coherently merge concepts.

We're able to do this by generating large amounts of synthetic data using LLMs and vision models and then using AI to semantically sort and tag these elements.

Interpreting the themes and aesthetics of media

Mosaic is highly multimodal: it can interpret the essence of images and documents, be controlled by LLMs or agents, and be navigated with tactile controls.

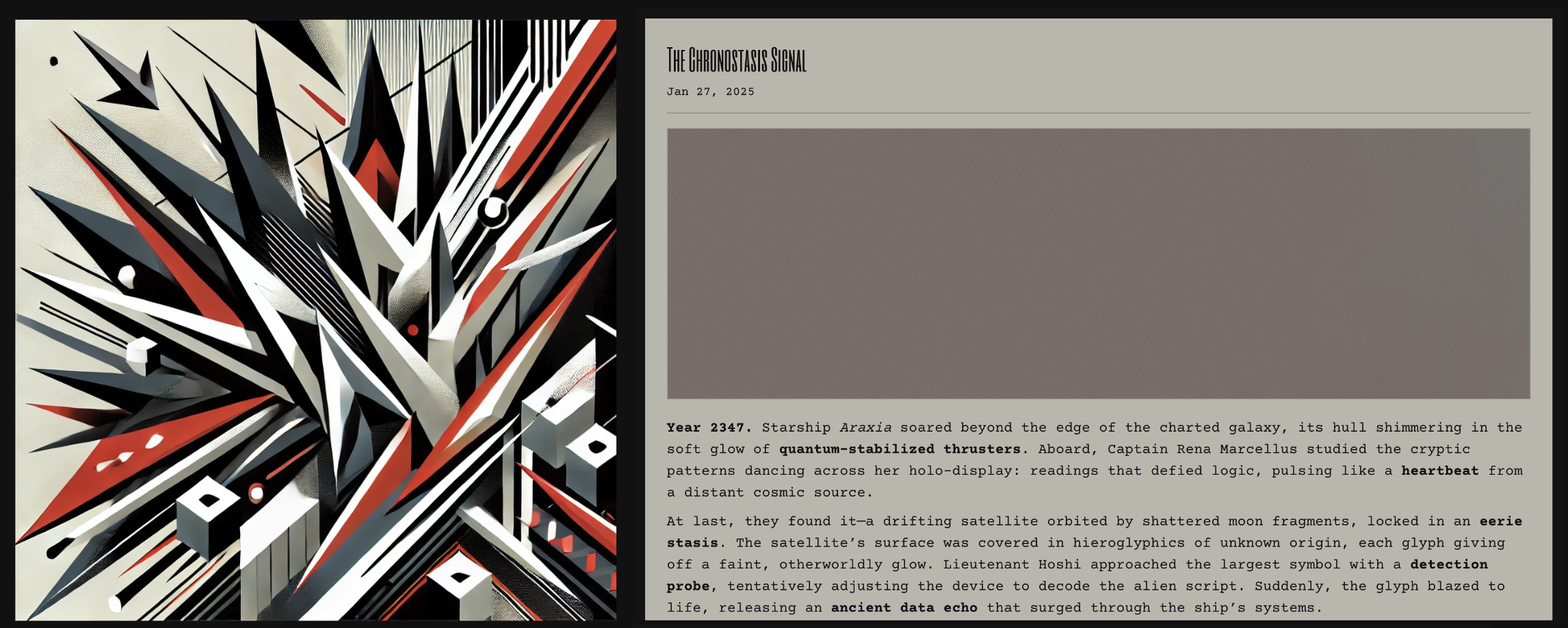

Here we've generated a theme by analyzing an image using a combination of heuristics and vision model calls.

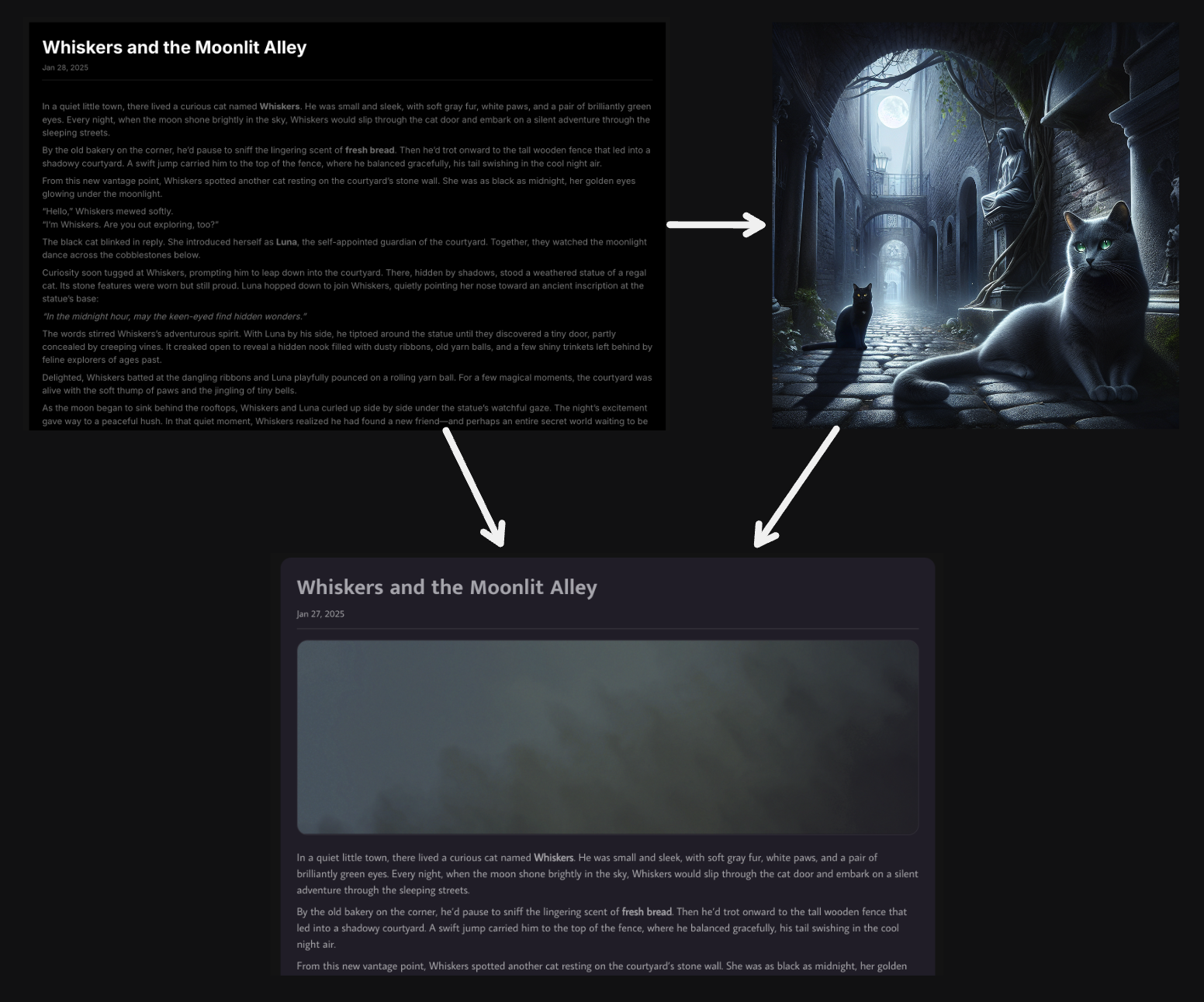

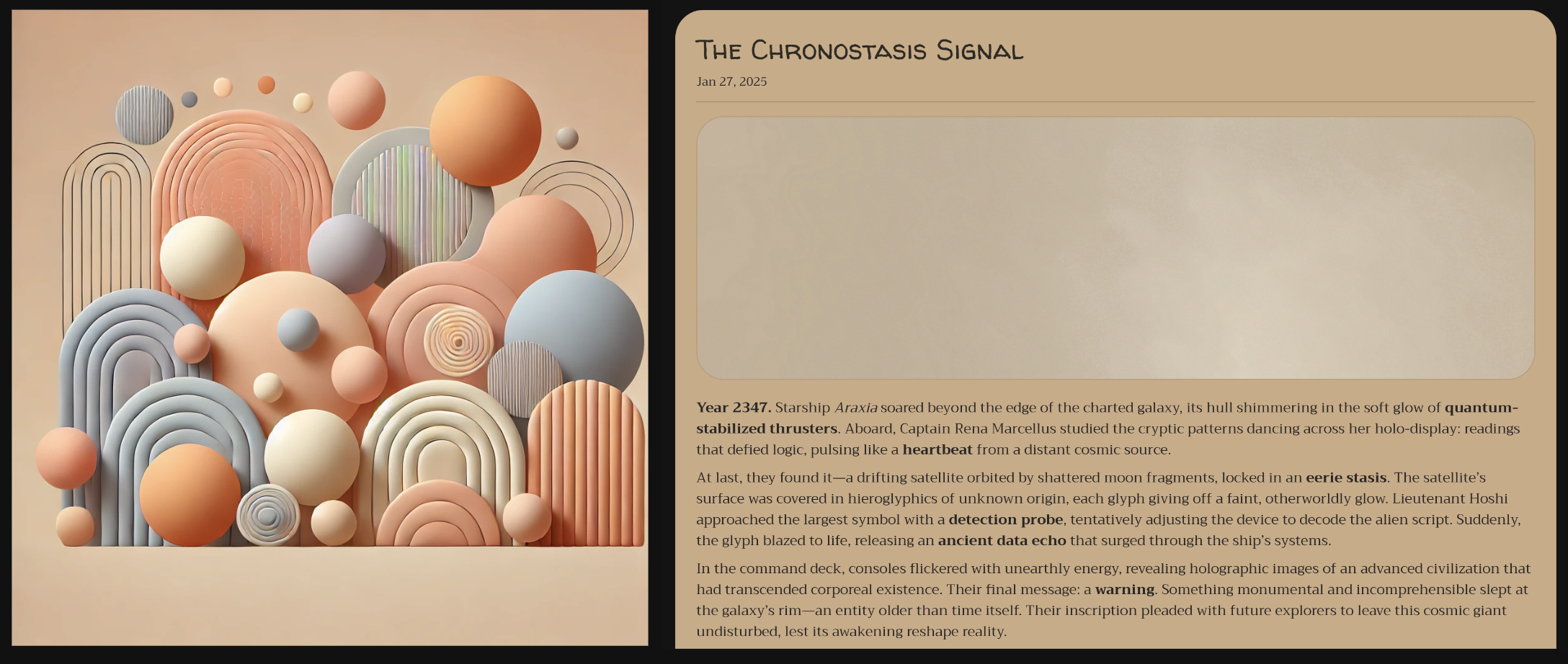

In this example, we've pulled inspiration from the contents of a Markdown file and used it to generate an image. We then extracted the color palette from that image and analyzed the text to make the rest of the design decisions.

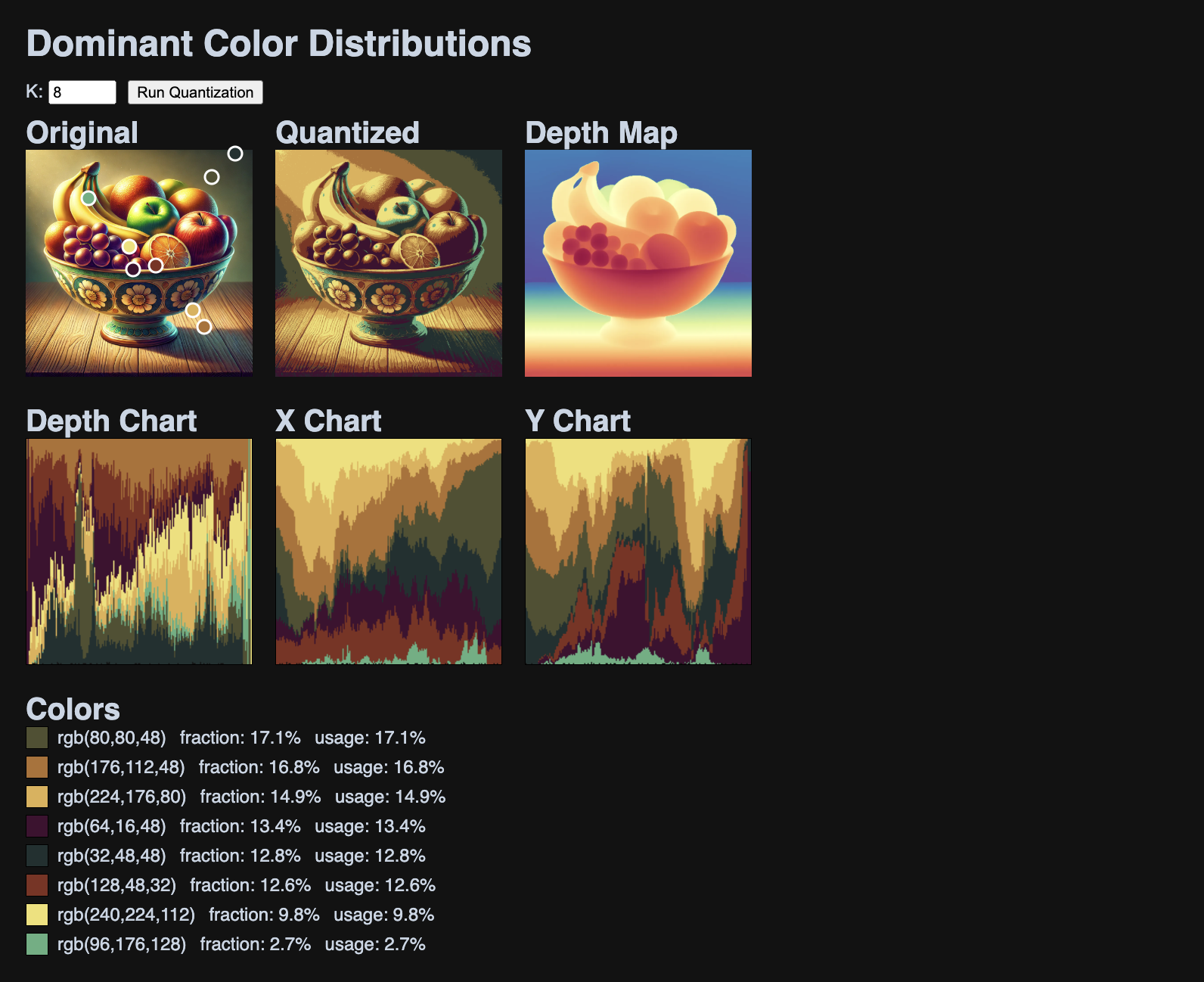

We analyze the dominant color usage in images to determine how colors should be applied to the design system.

Should colors used in the background of an image be applied to different elements than those in the foreground?
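One simple way to approach this question is sketched below. It assumes, purely for illustration, that pixels near the image border approximate the background and pixels in the center approximate the subject. The function names, the `margin` and `step` parameters, and the role names are hypothetical and not taken from Mosaic.

```python
from collections import Counter

def dominant_colors(pixels, k=3, step=32):
    """Return up to k most common colors after coarse quantization."""
    def bucket(color):
        # Snap each channel to the center of its bin so that
        # near-identical shades are counted together.
        return tuple((v // step) * step + step // 2 for v in color)
    counts = Counter(bucket(p) for p in pixels)
    return [color for color, _ in counts.most_common(k)]

def split_border_center(image, margin=1):
    """Split a 2D grid of RGB tuples into border pixels and center pixels."""
    h, w = len(image), len(image[0])
    border, center = [], []
    for y, row in enumerate(image):
        for x, px in enumerate(row):
            on_border = (y < margin or y >= h - margin
                         or x < margin or x >= w - margin)
            (border if on_border else center).append(px)
    return border, center

# A tiny 4x4 "photo": blue sky around an orange subject.
SKY, SUN = (100, 160, 230), (240, 140, 40)
image = [[SKY] * 4, [SKY, SUN, SUN, SKY], [SKY, SUN, SUN, SKY], [SKY] * 4]

border, center = split_border_center(image)
roles = {
    "background": dominant_colors(border, k=1)[0],
    "accent": dominant_colors(center, k=1)[0],
}
print(roles)
```

A real pipeline would likely use proper segmentation or a vision model rather than a fixed border margin; the point here is only that region-aware color counts can drive different design roles.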

We're not just using the dominant colors directly—instead we're using them to create custom RYB color spaces. This gives us the flexibility to sample colors from a much larger palette, while ensuring that the vibe of the image is maintained.
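The post does not say how these custom color spaces are built. One well-known way to define an RYB-style space is to assign a color to each of the eight corners of a red/yellow/blue cube and blend them trilinearly. The sketch below assumes that approach, with hypothetical corner colors standing in for values derived from an image.

```python
def sample_ryb(corners, r, y, b):
    """Trilinearly blend the 8 corner colors of an RYB cube.

    corners maps (r, y, b) bit triples to RGB tuples; r, y, b are in [0, 1].
    """
    def mix(c0, c1, t):
        return tuple(p + (q - p) * t for p, q in zip(c0, c1))

    # Blend along the red axis, then yellow, then blue.
    r00 = mix(corners[(0, 0, 0)], corners[(1, 0, 0)], r)
    r10 = mix(corners[(0, 1, 0)], corners[(1, 1, 0)], r)
    r01 = mix(corners[(0, 0, 1)], corners[(1, 0, 1)], r)
    r11 = mix(corners[(0, 1, 1)], corners[(1, 1, 1)], r)
    y0 = mix(r00, r10, y)
    y1 = mix(r01, r11, y)
    return mix(y0, y1, b)

# Hypothetical corners, imagined as derived from an image's dominant colors.
CORNERS = {
    (0, 0, 0): (250, 244, 230),  # "paper" tone
    (1, 0, 0): (200, 60, 40),    # image-specific red
    (0, 1, 0): (240, 200, 60),   # image-specific yellow
    (0, 0, 1): (40, 80, 160),    # image-specific blue
    (1, 1, 0): (230, 130, 40),
    (1, 0, 1): (110, 50, 120),
    (0, 1, 1): (60, 140, 90),
    (1, 1, 1): (40, 30, 30),     # darkest mix
}

print(sample_ryb(CORNERS, 0.5, 0, 0))  # halfway between paper and red
```

Because every sampled color is a blend of corners taken from the image, the palette can grow well beyond the original dominant colors while staying within the image's overall range.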

We don't rely on heuristics alone. To understand the themes and aesthetics of an image, we also lean on LLMs' fuzzy understanding of ideas. For example, we can determine whether an image has sharp or soft shapes, and then apply that to the design system.

An image with sharp, jagged points leads to fonts and border radii that match.

An image with soft, curved shapes leads to matching design elements.
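As a rough sketch of how a sharp-versus-soft judgment could feed the design system, suppose a model returns a sharpness score between 0 and 1. The score, the function names, the 24 px maximum, and the font family labels below are all assumptions made for illustration.

```python
def radius_for_sharpness(sharpness, max_radius_px=24):
    """Map a sharpness score in [0, 1] to a border radius in pixels.

    Sharper images get smaller radii; out-of-range scores are clamped.
    """
    s = min(1.0, max(0.0, sharpness))
    return round((1.0 - s) * max_radius_px)

def font_family_for_sharpness(sharpness):
    """Pick a hypothetical font family label from the same score."""
    return "angular" if sharpness >= 0.5 else "rounded"

for score in (0.0, 0.5, 1.0):
    print(score, radius_for_sharpness(score), font_family_for_sharpness(score))
```

Driving several properties from one score is what keeps the corners, fonts, and other elements feeling like they belong to the same image.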

We can also infer whether the site should default to a light or dark theme based on the image.

It's likely that a user who shares a picture of the sun intends a light-mode website, and that a user who shares a picture of the moon intends a dark-mode one.
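A heuristic version of this inference can be sketched with the standard sRGB relative luminance formula: average the luminance of the image's pixels and compare it to a cutoff. The 0.5 threshold and the function names are assumptions, and a real system might combine this with a vision model's reading of the subject matter.

```python
def relative_luminance(rgb):
    """Relative luminance of an sRGB color with 0-255 channels."""
    def linearize(channel):
        c = channel / 255
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4
    r, g, b = (linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def default_mode(pixels, threshold=0.5):
    """Guess "light" or "dark" from the mean luminance of the pixels."""
    mean = sum(relative_luminance(p) for p in pixels) / len(pixels)
    return "light" if mean >= threshold else "dark"

# Tiny stand-ins for a sunny photo and a moonlit one.
sun_photo = [(250, 240, 200)] * 3 + [(255, 200, 60)]
moon_photo = [(10, 12, 40)] * 3 + [(220, 220, 230)]

print(default_mode(sun_photo), default_mode(moon_photo))
```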

Collecting and Remixing

Mosaic encourages you to gather inspiring references—whether it’s a design generated by Mosaic, an image, or a memorable quote—and to incorporate them into future projects. It helps you recycle the things you love and build on them.

Procedural Generation and Rapid Variation

Since each design choice is parameterized, Mosaic can generate thousands of variations on the fly. Once you’ve chosen a point in the vector space, you can steer towards related themes without additional LLM calls. This lets you quickly lock in what you love and discard what you don’t.
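Here is a minimal sketch of how variations could be produced locally, without any model calls: jitter the chosen point with a little Gaussian noise and clamp each coordinate to its valid range. The `spread` and `seed` parameters and the assumption that every axis lives in [0, 1] are illustrative choices, not details from Mosaic.

```python
import random

def variations(point, n=5, spread=0.1, seed=None):
    """Return n nearby points by adding Gaussian noise to each coordinate.

    Coordinates are clamped to [0, 1]; passing a seed makes the
    result reproducible.
    """
    rng = random.Random(seed)
    results = []
    for _ in range(n):
        jittered = tuple(
            min(1.0, max(0.0, x + rng.gauss(0, spread))) for x in point
        )
        results.append(jittered)
    return results

chosen = (0.7, 0.2, 0.5)  # hypothetical point the user has locked in
for v in variations(chosen, n=3, seed=42):
    print(tuple(round(x, 2) for x in v))
```

Shrinking `spread` as the user homes in on a direction gives progressively finer refinements around the same point.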

Steering Aesthetics with Sliders, Graphs & Filters

Mosaic provides intuitive controls like sliders and interactive graphs. Each control corresponds to a broad attribute—such as “warm” or “vibrant”—and moving it adjusts multiple parameters at once.

You can also filter by hierarchical categories (e.g. “serif” or “geometric-humanist”) to narrow your exploration. This approach turns each dimension into a lens for refining your design.
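The two controls described above can be sketched as follows. Each slider is modeled as a set of per-parameter deltas scaled by the slider amount, and each font carries a category path that filters match against. The slider definitions, parameter names, and font entries are hypothetical.

```python
# Hypothetical sliders: each one nudges several parameters at once.
SLIDERS = {
    "warm": {"hue": -10.0, "saturation": 0.25},
    "vibrant": {"saturation": 0.5, "contrast": 0.25},
}

def apply_slider(params, slider, amount):
    """Return a copy of params shifted by the slider's deltas times amount."""
    deltas = SLIDERS[slider]
    return {k: v + amount * deltas.get(k, 0.0) for k, v in params.items()}

# Hypothetical hierarchical categories, from broad to specific.
FONT_CATEGORIES = {
    "Font A": ("serif", "transitional"),
    "Font B": ("sans-serif", "geometric-humanist"),
    "Font C": ("sans-serif", "grotesque"),
}

def filter_by_category(catalog, category):
    """Return names whose category path contains the given category."""
    return [name for name, path in catalog.items() if category in path]

theme = {"hue": 30.0, "saturation": 0.5, "contrast": 1.0}
print(apply_slider(theme, "warm", 0.5))
print(filter_by_category(FONT_CATEGORIES, "sans-serif"))
```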

For example, here we are steering the selection of fonts using parameters.

It's difficult to visualize a large number of parameters at once, so another UX paradigm we're exploring is interpolating between the design elements themselves. This collapses the high-dimensional parameters into 2D space, letting you see how the design elements change as you move between them.
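One way to picture this is a square pad with a design element pinned to each corner, where the cursor position blends the four corner vectors bilinearly. This is a sketch under that assumption; the post does not specify how the 2D mapping is actually computed, and the corner vectors here are made up.

```python
def blend_2d(top_left, top_right, bottom_left, bottom_right, x, y):
    """Bilinearly blend four corner vectors; x and y are in [0, 1]."""
    def mix(a, b, t):
        return tuple(p + (q - p) * t for p, q in zip(a, b))
    top = mix(top_left, top_right, x)
    bottom = mix(bottom_left, bottom_right, x)
    return mix(top, bottom, y)

# Hypothetical 3-dimensional style vectors at the pad's corners.
corners = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 1.0)]

# The center of the pad is an equal mix of all four corners.
print(blend_2d(*corners, 0.5, 0.5))  # prints (0.5, 0.5, 0.5)
```

However many dimensions the underlying vectors have, the user only ever manipulates two coordinates.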

Tools for Agents

By making design systems more interpretable, Mosaic also makes it easier for AI agents to generate and adapt designs in a visually coherent way. An agent can respond to higher-level instructions—like “use warm colors” or “aim for a rustic feel”—and still produce results that look intentional.

Here we're surfacing a list of related theme options using an LLM.

Data-Driven, Just-in-Time Design Systems

Mosaic supports both static designs and designs that adapt to their context.

Brands can define their core boundaries—like certain fonts or colors—while letting content or user interactions shape the final look and feel. This opens the door to more dynamic experiences, such as a blog that adapts to the mood of each post, or a site that shifts aesthetics by season.

It’s a move away from rigid guidelines, toward adaptable systems that still feel coherent.
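A minimal sketch of the idea: the brand declares hard boundaries, a context-derived theme proposes values, and a constraint step pulls the proposal back inside the boundaries. The brand fields, theme keys, and fallback rule below are hypothetical.

```python
# Hypothetical brand boundaries.
BRAND = {
    "fonts": ["Brand Sans", "Brand Serif"],
    "hue_range": (180.0, 260.0),
}

def constrain(theme, brand):
    """Return a copy of theme adjusted to respect the brand's boundaries."""
    result = dict(theme)
    # Fall back to the brand's primary font if the proposal is off-brand.
    if result["font"] not in brand["fonts"]:
        result["font"] = brand["fonts"][0]
    # Clamp the hue into the allowed range; other keys pass through.
    low, high = brand["hue_range"]
    result["hue"] = min(high, max(low, result["hue"]))
    return result

# A theme imagined as derived from the mood of a cheerful blog post.
post_theme = {"font": "Playful Script", "hue": 20.0, "radius": 16}
print(constrain(post_theme, BRAND))
```

Anything the brand does not constrain, such as the radius here, stays free to follow the content.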

A related analogy is the difference between a linear video game and an open-world one. In the former, you’re guided through a fixed path; in the latter, you can explore and shape the world around you. Mosaic brings that same sense of freedom and agency to design, letting you wander and experiment.

What’s next?

Mosaic is still in its early stages. We have the system’s core properties working, but there’s a lot more to explore—particularly in finding cohesive pairings across design-system attributes. Moving beyond basic theming, we’re looking to extend this approach to primitive components like buttons and input fields, and eventually entire layouts.

We also see potential in auto-generating design system documentation to guide both human creators and AI agents. Tying these methods into generative image and text systems could further align visual and written content with the design system’s overall style.